Self-supervised learning through the eyes of a child

Orhan et al., 2020

Source: Orhan et al., 2020

Source: Orhan et al., 2020Summary

- How much of early knowledge in children is explained by learning versus innate inductive biases?

- Specifically for the development of high-level visual categories

- Apply recent self-supervised learning methods to longitudinal, egocentric video from young children

- Results in high-level visual representations

- Links: [ website ] [ pdf ]

Background

- Experimental evidence suggests that young children already have a sophisticated understanding about the world

- Leaves open the old “nature vs. nurture” question

- While advances in self-supervised learning have demonstrated the ability to learn powerful visual representations without additional supervision, they have not been applied to developmentally realistic, longitudinal, egocentric video

- Prior work (Bambach et al., 2018) shows that naturalistic data collected by toddlers have unique characteristics

Methods

- SAYCam Dataset

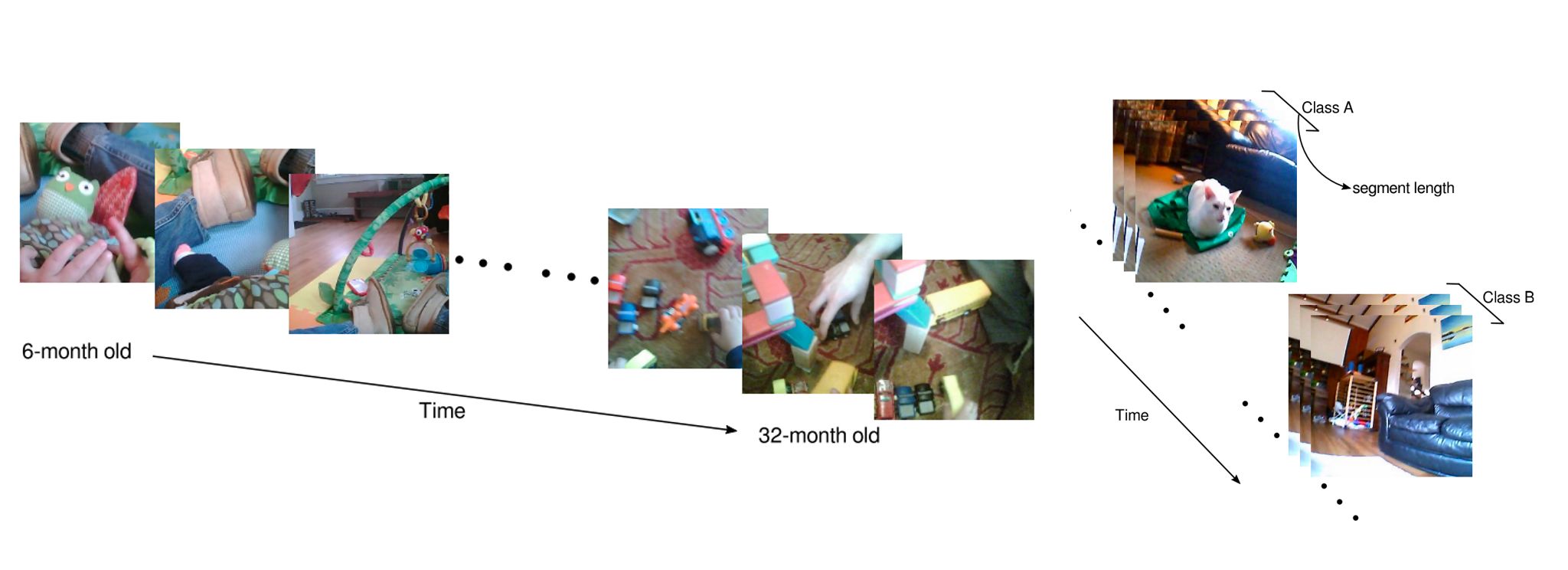

- Approximately 500 hours of video, split across three children, from head-mounted cameras

- Collected over a two year period (6-32 months), with 1-2 hours of recording per week

- Use MobileNetV2 and train using self-supervised algorithms on headcam videos, then freeze trunk and train linear readouts for downstream classification

- Temporal classification: based on principle of temporal invariance (higher level variables change more slowly)

- Divides entire dataset into finite number of temporal classes of equal duration, predict which episode a given frame belongs to

- Best model used 5fps sampling rate, 288s segment length, and color and grayscale data augmentations

- Static contrastive learning: momentum contrast (MoCo) objective

- Temporal contrastive learning: also use each frame’s two immediate neighbors as positive examples

- Baselines: random weights, ImageNet pre-trained, HOG features

- Temporal classification: based on principle of temporal invariance (higher level variables change more slowly)

Results

- Evaluate on downstream classification tasks:

- Curated, labeled subset from SAYCam, Child S

- Toybox dataset: 12 categories, 30 exemplars in each, with 10 different transformations – closer to SAYCam than say ImageNet

- Reduce correlations between train-test split by subsampling 10x in SAYCam, and holding out exemplars in Toybox

- Results

- Temporal classification performed better than the two contrastive learning objectives

- For Toybox with exemplar split, pre-trained ImageNet did the best by far and temporal classification was closer to the contrastive learning methods

- For SAYCam, temporal classification on Child S data was able to outperform pre-trained ImageNet – possibly a little bit of overfitting

- Random weights and HOG performed significantly worse

Conclusion

- Uses whole dataset at once, doesn’t respect the timescale that the data is acquired

- Could implement some sort of curriculum where the portion of data used shifts as training progresses

- Missing interactive learning, but might not be that important for object classification compared to say intuitive physics

- Would be interesting to see at which point higher sampling rate and segment lengths start to hurt performance

- While SAYCam is probably the best dataset to-date for these experiments, it is a very small fraction of the total experience (~1%)

- Not really clear why temporal classification performs the best

- Classification of frames at the boundary between episodes would seem kind of arbitrary

- Could imagine some continuum between temporal classification and temporal contrastive learning, where the “positiveness” of frames decreases as their temporal separation increases