ImageNet-trained CNNs are biased towards texture; increasing shape bias improves accuracy and robustness

Geirhos et al., 2019

Source: Geirhos et al., 2019

Source: Geirhos et al., 2019Summary

- CNNs are thought to recognize objects based on increasingly complex shape representations, but recent evidence suggests the importance of textures

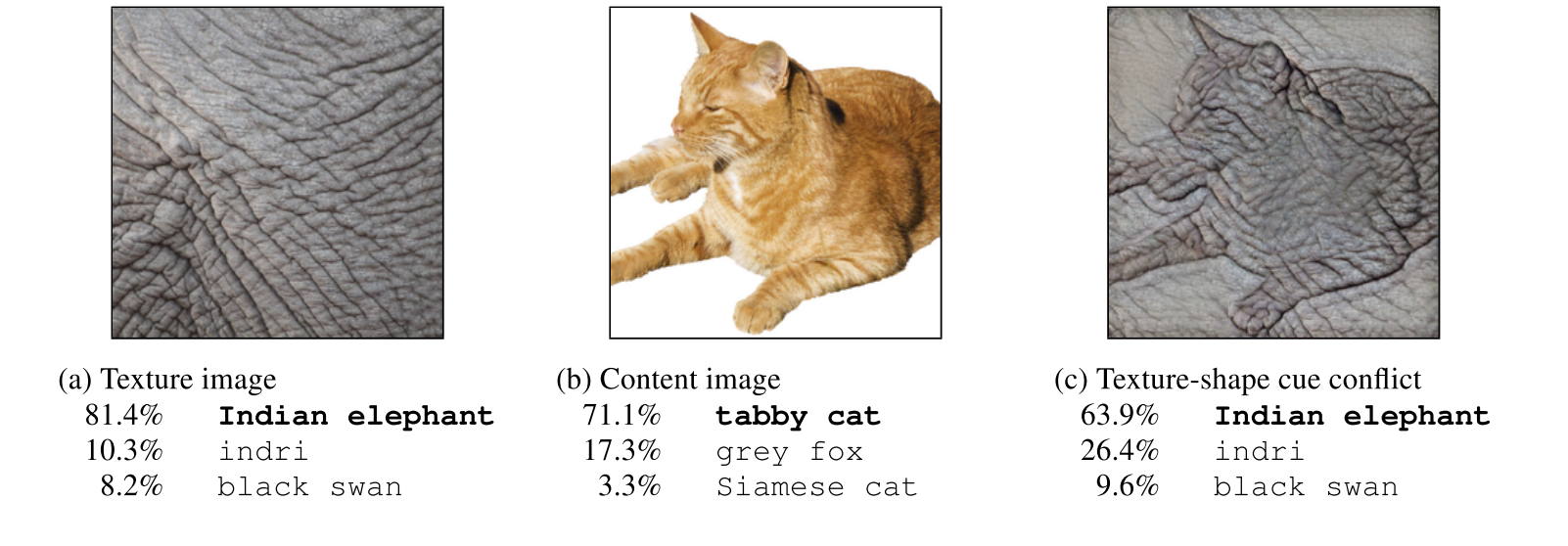

- Evaluate CNNs and humans on images with texture-shape cue conflict

- Show that ImageNet-trained CNNs are biased towards recognizing textures versus shapes, in contrast to humans

- Training CNNs on “Stylized-ImageNet” improves shape representation, better matches human behavior, and improves object detection performance

- Links: [ website ] [ pdf ]

Background

- Object shape is the most important cue for human object recognition, surpassing others like size or texture (Landau et al., 1988)

- Shape Hypothesis: CNNs combine low-level features into complex shapes until object can be classified

- Texture Hypothesis: Object textures are more important than shapes for CNN object recognition

- Shape Hypothesis contradicts some empirical findings that demonstrate the importance of texture in object recognition

- CNNs can classify texturized images even when global shape structure is destroyed

- Images with texture-shape cue conflict enables quantification of biases in CNNs and humans

Methods

- Psychophysics Experiments: fixation, 300ms stimulus presentation, pink noise backward masking, choose one of 16 categories

- Four CNNs pre-trained on ImageNet: AlexNet, GoogLeNet, VGG16, and ResNet-50

- Main Datasets: Only used object and texture images that were correctly classified by all four CNNs

- Original: 160 natural color images of objects (10 per category) with white background

- Greyscale: Original images converted to greyscale

- Silhouette: Original images showing black object on white background

- Edges: *Original images with Canny edge extractor applied

- Texture: 48 natural color images of textures (3 per category) – full-width patches of animals or many repetitions of the same object

- Cue Conflict: 1280 images (80 per category) by applying iterative style transfer between an image from Texture (as style) and Original (as content)

- Stylized-ImageNet (SIN): apply AdaIN style transfer to each ImageNet (IN) image using Kaggle’s Painter by Numbers (79,434 paintings) dataset for style images

Results

- Original, Texture, and Greyscale images were generally recognized equally well by both CNNs and humans

- However, for Silhouette and Edges images with little to no texture information, CNNs performed much worse than humans

- CNN performance on edges was significantly worse than CNNs on silhouette, and also humans on edges

- Rank order of CNN performance was roughly reversed between silhouette and edges, with ResNet-50 (the best model on ImageNet) doing the best on silhouette and worst on edges

- For Cue Conflict images, looking at only correct decisions – a substantial fraction of images were hard to recognize for both humans and CNNs

- Humans show a strong bias towards shape (~95%)

- CNNs show a clear bias for texture (~50-80%)

- Stylized-ImageNet:

- Poor performance on SIN by BagNets, which have limited maximum receptive field size, indicating that texture features are not sufficient (as designed and expected)

- SIN is a slightly harder task than IN (79% vs 92.9% top-5 accuracy)

- SIN transfers well to IN, but not vice versa

- SIN trained ResNet-50 has stronger shape bias compared to IN trained (81% vs 22%)

- Jointly training on SIN + IN and fine-tuning on IN improves classification (IN) and transfer learning (Pascal VOC) performance

Conclusion

- It’s possible that the restriction of images to ones that all models classify correctly actually confounds the cue conflict results

- Equal performance by a given model on object and texture images, but not a ceiling, might be better

- Also, there’s a continuum of salience between texture and shape for cue conflict images, how do we quantify and explicitly account for this

- Is the emergence of texture bias in ImageNet-trained CNNs a result of the data or architecture?

- I.e. Is ImageNet more easily solvable by texture cues than “real data”? If not, then why do humans develop a shape bias?

- Or is it that current CNN architectures and learning rules result in texture representation over shape?

- Cue Conflict images are inherently unnatural