High Fidelity Video Prediction with Large Stochastic Recurrent Neural Networks

Villegas et al., 2019

Source: Villegas et al., 2019

Source: Villegas et al., 2019Summary

- Addresses whether specialize architectures are needed for video prediction

- Investigates the performance of video prediction model as network capacity increases

- Demonstrates that scaling up model size improves prediction accuracy

- Links: [ website ] [ pdf ]

Background

- Learning accurate predictive models of the (visual) world remains a crucial, yet challenging, problem

- Some prior work towards solving this problem use multi-modal sensory streams, specialized computations (e.g. optical flow), or additional high-level information (e.g. landmarks, segmentations)

- Increasing model capacity has commonly been shown to improve performance across various domains

- Possibly because it better leverages the benefits of learning

Methods

- Use Stochastic Video Generation (SVG) as base architecture, since it only uses standard neural network layers

- Change encoder-decoder to only have convolutional layers for more detailed reconstruction since bottleneck is larger

- Use convolutional LSTM instead of fully-connected LSTM

- Use

- Scale up number of neurons in encoder-decoder by factor of

Results

- Baselines:

- LSTM: remove stocahstic component

- CNN: remove stocahstic component and LSTM component

- Datasets:

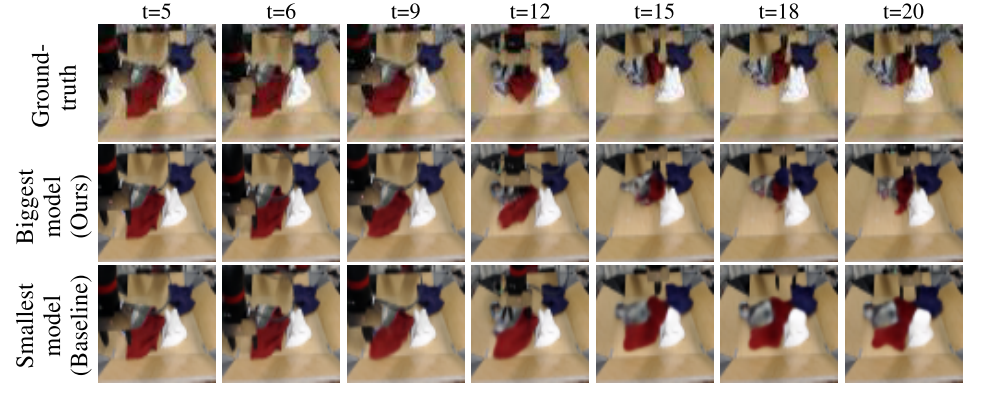

- Action-conditioned towel pick: robot arm is interacting with towels

- Human 3.6M: humans performing actions inside a room (e.g. walking, sitting)

- KITTI: driving dataset with partial observability

- Metrics:

- Frame-wise:

- Peak Signal-to-Noise (PSNR): measures exact pixel match

- Structural Similarity (SSIM): measures exact pixel match

- VGG Cosine Similarity: measures perceptual similarity based on similarity of VGG features

- Dyanimcs-based:

- Frechet Video Distance (FVD): compares quality of generated videos to ground-truth videos using features from video classification network

- Amazon Mechanical Turk (AMT): humans asked which of two videos were more realistic or if they looked about the same

- Frame-wise:

- Results summary:

- Largest SVG model performs best with FVD for Towel pick and Human 3.6M, while largest LSTM performs slightly better for KITTI

- Performance incresases with model capacity for all models (SVG, LSTM, CNN)

- CNN, without recurrence, does poorly overall

- Humans prefer predictions from larger models, but it also seems like LSTM predictions are more realistic than SVG

- SVG and LSTM perform similarly based on frame-wise metrics, with SVG slightly outperforming for longer rollouts

Conclusion

- Verifies the intuition and general trend that larger capacity networks perform better, given sufficient training data

- Possible to improve even more by training on higher resolution images

- While they compare to ablations it would have been interesting to see how architectures with additional inductive biases compare, especially with respect to number of parameters

- The poor performance without recurrence might motivate the use of object-centric dynamics models, which seem to perform reasonably without recurrence