A critique of pure learning and what artificial neural networks can learn from animal brains

Zador, 2019

Summary

- The revolution in AI has been fueled by supervised learning with artificial neural networks (ANNs) while it is believed that animals mainly rely on unsupervised learning

- However, most of animal behavior might not be the result of any learning algorithm, but rather encoded in the genome

- The compression of information in the genome can be leveraged for rapid learning in ANNs

Background

- Despite rapid advances in AI, current systems are far from approaching human intelligence, especially in the areas of language, reasoning, and common sense

- “The deliberate process we call reasoning is, I believe, the thinnest veneer of human thought, effective only because it is supported by this much older and much more powerful, though usually unconscious, sensorimotor knowledge.” - Hans Moravec

- Learning by ANNs:

- “Learning” refers to extracting statistical regularities from data and encoding them into the parameters of a network

- Much of the progress is attributed to increases in raw compute power and larger datasets, which reduce the need for human intervention (e.g. feature engineering)

- “Bias-variance tradeoff” explains the trend of larger networks paired with larger datasets

- Learning in animals:

- “Learning” refers to a long-lasting change in behavior as a result of the organism’s experience

- Children receive limited labeled data, but large amounts of raw sensory data
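For reference, the bias-variance tradeoff mentioned under learning by ANNs is usually stated as the standard decomposition of expected squared error. The notation here is an assumption added for illustration and is not taken from the paper: data are generated as $y = f(x) + \varepsilon$ with zero-mean noise of variance $\sigma^2$, and $\hat{f}_D$ is the model fitted to a training set $D$.

$$
\mathbb{E}_{D,\varepsilon}\big[(y - \hat{f}_D(x))^2\big]
= \underbrace{\big(\mathbb{E}_D[\hat{f}_D(x)] - f(x)\big)^2}_{\text{bias}^2}
+ \underbrace{\mathbb{E}_D\big[(\hat{f}_D(x) - \mathbb{E}_D[\hat{f}_D(x)])^2\big]}_{\text{variance}}
+ \underbrace{\sigma^2}_{\text{noise}}
$$

Read this way, a larger network tends to lower the bias term but raise the variance term, and a larger dataset tends to bring the variance back down, which is one way to interpret the pairing of larger networks with larger datasets.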

Methods

- How do animals function so well so soon after birth?

- One possibility: they exploit powerful unsupervised learning algorithms

- Alternatively (the author's primary view): much of their sensory representation and behavior is innate

- Courtship rituals and burrowing are examples of complex innate behaviors

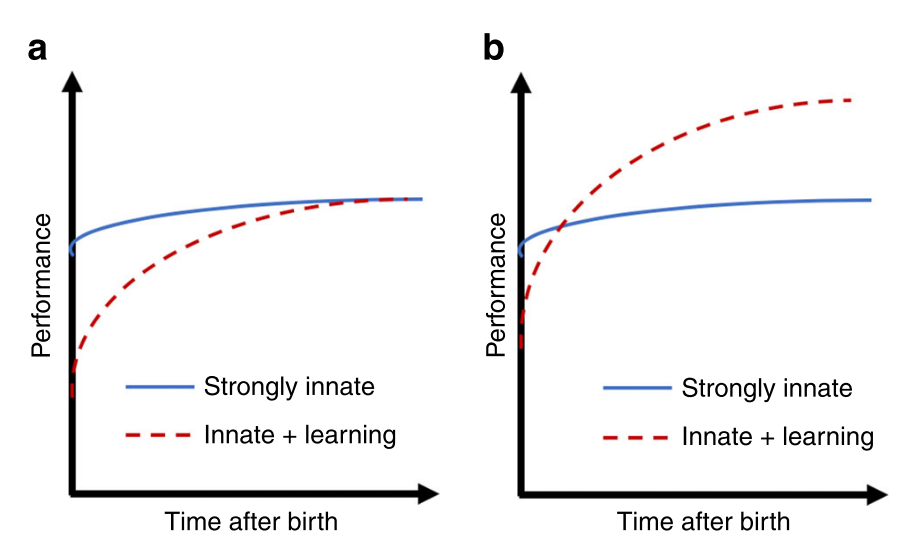

- Performance achievable from innate mechanisms might differ from what is achievable with additional learning, especially if the environment changes rapidly

- This results in a tradeoff between innate and learned behavioral strategies, similar to the bias-variance tradeoff

- Synergy between the two mechanisms - e.g. the use of place cells in the representation of space in rodents, or the preference towards faces in primates

- These innate mechanisms are encoded in the genome as “blueprints” for wiring the nervous system

- The capacity of the genome is too small to specify every connection in larger brains

- Instead, the genome encodes a set of rules that specify the wiring pattern (e.g. the speculation that the neocortex consists of a repeated “canonical” microcircuit)
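The capacity argument above can be made concrete with a back-of-the-envelope calculation. The sketch below is only illustrative: the figures (about 3 billion nucleotides, about 10^11 neurons, about 10^3 connections per neuron) are commonly quoted order-of-magnitude values assumed here, not numbers stated in these notes, and the function names are hypothetical.

```python
import math

# Order-of-magnitude figures, assumed for illustration only.
GENOME_NUCLEOTIDES = 3e9     # approximate length of the human genome
BITS_PER_NUCLEOTIDE = 2      # four possible bases -> log2(4) = 2 bits
NEURONS = 1e11               # rough neuron count of a human brain
SYNAPSES_PER_NEURON = 1e3    # rough number of connections per neuron


def genome_capacity_bits(nucleotides=GENOME_NUCLEOTIDES,
                         bits_per_nucleotide=BITS_PER_NUCLEOTIDE):
    """Upper bound on the information the genome could store."""
    return nucleotides * bits_per_nucleotide


def wiring_diagram_bits(neurons=NEURONS,
                        synapses_per_neuron=SYNAPSES_PER_NEURON):
    """Bits needed to list every connection explicitly.

    Naming the target neuron of one synapse takes log2(neurons) bits.
    """
    bits_per_synapse = math.log2(neurons)
    return neurons * synapses_per_neuron * bits_per_synapse


genome_bits = genome_capacity_bits()
wiring_bits = wiring_diagram_bits()
ratio = wiring_bits / genome_bits

print(f"genome capacity:          {genome_bits:.2e} bits")
print(f"explicit wiring diagram:  {wiring_bits:.2e} bits")
print(f"shortfall factor:         {ratio:.2e}")
```

Under these assumptions the explicit wiring diagram needs several orders of magnitude more bits than the genome can hold, which is why a compact set of wiring rules is the more plausible encoding.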

Results

- Two nested optimization processes in animals:

- Outer “evolution” loop acting on a generational timescale - acts indirectly on the brain through wiring rules encoded in the genome, which enable learning specific things quickly

- Inner “learning” loop acting within the lifetime of an individual - acts directly on synaptic weights in the brain

- Limited size of genome may act as a regularizer

- Genome size varies widely across species, with many fish having much larger genomes than humans

- Currently, supervised learning in ANNs attempts to model both processes jointly

- ANN research could instead focus on learning network architectures rather than specific weight matrices, as a more general form of transfer learning (i.e. meta-learning)
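The two nested loops described above can be sketched in a toy setting. This is a minimal illustration under stated assumptions, not the paper's method: the environment is a made-up family of linear regression tasks, the "wiring rule" is simple tiling of a short genome into a longer weight vector, and the outer loop is a basic mutate-and-keep-if-better search. All names (`wire`, `lifetime_loss`, `evolve`) and constants are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(0)

N_WEIGHTS = 16   # synaptic weights of one individual
GENOME_LEN = 4   # the genome is much smaller than the weight vector
N_TASKS = 8      # lifetimes used to score one genome
N_SAMPLES = 5    # limited experience per lifetime (fewer samples than weights)

# Hypothetical environment: every lifetime's target weights share a repeated motif.
motif = rng.normal(size=GENOME_LEN)
shared = np.tile(motif, N_WEIGHTS // GENOME_LEN)
tasks = []
for _ in range(N_TASKS):
    w_true = shared + 0.1 * rng.normal(size=N_WEIGHTS)
    x_train = rng.normal(size=(N_SAMPLES, N_WEIGHTS))
    x_test = rng.normal(size=(50, N_WEIGHTS))
    tasks.append((x_train, x_train @ w_true, x_test, x_test @ w_true))


def wire(genome):
    """Wiring rule: expand the compact genome into initial weights by tiling."""
    return np.tile(genome, N_WEIGHTS // GENOME_LEN)


def lifetime_loss(genome, steps=10, lr=0.02):
    """Inner loop: gradient descent on the weights within each lifetime."""
    losses = []
    for x_train, y_train, x_test, y_test in tasks:
        w = wire(genome)  # innate starting point
        for _ in range(steps):
            grad = 2 * x_train.T @ (x_train @ w - y_train) / N_SAMPLES
            w = w - lr * grad
        losses.append(np.mean((x_test @ w - y_test) ** 2))
    return float(np.mean(losses))


def evolve(generations=200, sigma=0.1):
    """Outer loop: mutate the genome and keep a mutant only if it learns better."""
    genome = np.zeros(GENOME_LEN)
    best = lifetime_loss(genome)
    history = [best]
    for _ in range(generations):
        child = genome + sigma * rng.normal(size=GENOME_LEN)
        loss = lifetime_loss(child)
        if loss < best:
            genome, best = child, loss
        history.append(best)
    return genome, history


genome, history = evolve()
print(f"post-learning loss with blank genome:   {history[0]:.3f}")
print(f"post-learning loss with evolved genome: {history[-1]:.3f}")
```

The outer loop never touches the weights directly; it only changes the genome, which sets the starting point for the inner loop. Keeping `GENOME_LEN` far below `N_WEIGHTS` is a crude stand-in for the idea that a small genome acts as a regularizer, since only structure shared across lifetimes can be encoded.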

Conclusion

- There is still much that AI can learn from the brain, especially with regard to learning generic wiring rules (network architectures)

- The characteristics desired of an intelligent machine are tightly constrained to match those of humans, suggesting that only a machine structured similarly to the brain can achieve them

- Although both planes and birds fly while being designed very differently, the same reasoning does not necessarily apply to AI systems and the human brain

- Just as planes can fly much faster and higher than birds but cannot catch fish in a river, computers already exceed humans at some tasks but are far behind on the specialized set of tasks associated with general intelligence