ConvNets and ImageNet Beyond Accuracy: Understanding Mistakes and Uncovering Biases

Stock and Cisse, 2018

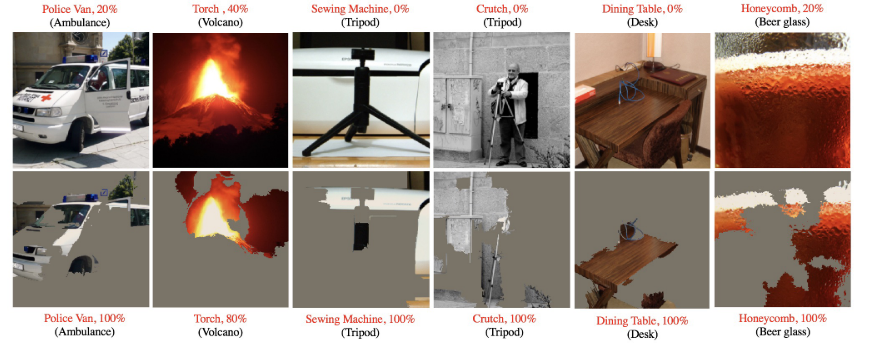

Source: Stock and Cisse, 2018

Source: Stock and Cisse, 2018Summary

- The slowdown in progress of CNNs applied to ImageNet, combined with their lack of robustness and tendency to exhibit undesirable biases, has raised questions about their reliability

- Perform human subject studies using explantions of the model

- Shows that explantions can mitigate impact of adversarial examples to end-users and uncover undesirable biases

- Links: [ website ] [ pdf ]

Background

- ImageNet has been a popular measure of progress in computer vision, with many methods using pre-trained ImageNet features

- While accuracy on ImageNet has improved, models still exhibit lack of robustness and undesirable biases

- Human studies yield insights into the quality of a model’s prediction from the perspective of the end-user

- Related to a later study by Beyer et al., 2020, which also supports the claim that ImageNet accuracy may be an underestimate of model quality due to incorrect ground truth labels

Methods

- Feature-based Explanation (LIME): highlights the super-pixels with positive weights towards a specific class

- Model Criticism: use prototypes and criticisms to represent model’s distribution

- MMD: minimizing the maximum mean discrepancy

- Adversarial selection: prototype from examples not misclassified after 10 IFGSM steps, criticisms from example misclassified after 1 IFGSM step

- Present misclassified examples and their predicted labels to participants, asked whether predicted class “is relevant for this image”

- Generate adversarial examples with 20 steps of IFGSM, non-targeted

- First condition: present full adversarial image

- Second condition: show only top eight most important features from LIME

- Present participant with six classes represented by six images each and a target sample image, asked to assign target sample to correct class

- Either use six prototypes or three prototypes and three criticisms

Results

- For ~40% of examples misclassified by ResNet-101, all five participants agree with the model’s prediction, due the ImageNet labeling procedure which ignores the mutlilabel nature of the images

- When full adversarial images are presented, participants agree with model 22% of the time

- When only explanation is shown, agreement increases to 31%

- Examining cases when participants agree with model when explanation is shown but not for the whole image shows that the explanation either reveals the object supporting the prediction or creates an ambiguous context that makes prediction plausible

- Using critcs in addition to prototypes increases success rate by a few percentage points

- Adversarial selection performs slightly better than MMD, probability-based selction, and random selction

- For the

basketballclass, prototypes are more likely to contain a black person while criticisms are more likely to contain a white person- Same type of bias exists for other classes too, e.g.

traffic light

- Same type of bias exists for other classes too, e.g.

Conclusion

- Provides additional evidence that the mutlilabel nature of ImageNet images makes evaluating accuracy gains less straightforward

- Demonstrates that providing explantions and model criticism can be useful tools to improve the reliability of these models for end-users