Adversarial Examples that Fool both Computer Vision and Time-Limited Humans

Elsayed et al., 2018

Source: Elsayed et al., 2018

Source: Elsayed et al., 2018Summary

- Aims to answer whether humans are prone to similar mistakes as computer vision models

- Leverage techniques that transfer adversarial examples to other models with unknown parameters and architecture

- Find that robust adversarial examples also affect time-limited humans

- Links: [ website ] [ pdf ]

Background

- Small, carefully designed perturbations to the input can cause machine learning models to produce the incorrect output, this is known as adversarial examples

- One phenomenon that has been observed is that adversarial examples often transfer across a wide variety of models

- While humans also exhibit errors to certain inputs (e.g. optical illusions), these generally don’t resemble adversarial examples

- Since humans are usually used as existence proof for AI algorithms, either:

- Humans resist this class of adversarial examples, and therefore it should be possible for machines too

- Humans are also affected, and therefore should maybe focus on making systems secure despite having non-robust machine learning components

- Notes about definition of adversarial examples:

- Designed to cause a mistake, not necessarily differ from human judgement

- Do not have to be imperceptible (to humans)

Methods

Machine Learning Vision Pipeline

- Combined ImageNet images into six coarse categories in three groups: Pets (dog, cat), Vegetables (broccoli, cabbage), Hazard (spider, snake)

- Train ensemble of 10 CNN models which were based on Inception and ResNet but had an additional retinal layer to better match early human visual processing (e.g. spatial blurring from foveation)

- Generate adversarial examples on these classifiers using gradient descent with new target class, with max perturbation on a single pixel constrained by

Psychophysics Experiments

- 38 subjects sat in fixed chair and asked to classify images as one of two classes (two alternative forced choice)

- After short fixation period, image that spanned

- Participants had up until 2200 ms after mask was turned off to respond, time pressure helps ensure that even subtle effects on perception are detectable

- Each session only included one of the image groups (Pets, Vegetables, Hazard) with images in one of four conditions:

image: images from ImageNet rescaled to [40, 215] to prevent clipping when perturbations are addedadv: images with adversarial perturbationsflip: images withfalse: images from ImageNet outside of the two classes in the group, but perturbed towards one of the classes in the group,

Results

- Adversarial examples tested on two new models successful for

advandfalseconditions, whileflipimages had little effect (validating its use as control) - In the

falsecondition, adversarial perturbations successfully biased human decisions in all three groups by ~1-5% and response time was inversely correlated to the perceptual bias pattern - In the other setting, humans had similar accuracies on

imageandflipconditions while being ~7% less accurate onadvimages

Conclusion

- Without a time limit humans still get the correct class, suggesting that:

- The adversarial perturbations do not change the “true class”

- Recurrent connections could add robustness to adversarial examples

- Or that adversarial examples do not transfer well from feed-forward to recurrent networks

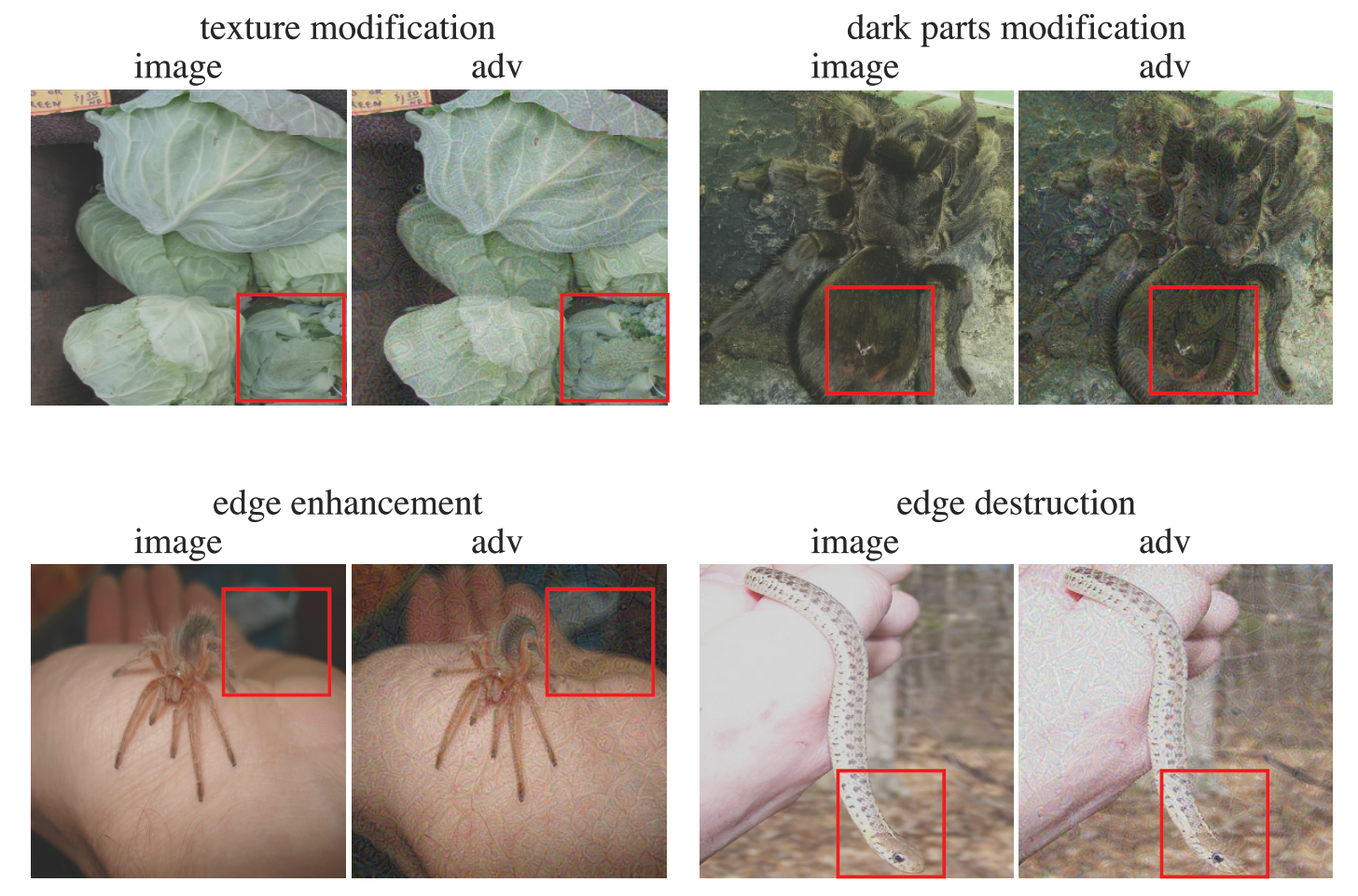

- Qualitatively, perturbations seem to work by modifying object edges, modifying textures, and taking advantage of dark regions (lager perceptual change for given size perturbation)

- Results open up a lot of questions for further investigation:

- How does transfer depend on

- Can retinal layer be removed?

- How crucial is the model ensembling?

- How does transfer depend on